Virtualization has been one of the most transformative innovations the world of IT has offered us. Storage virtualization simplifies how data is stored, managed, and accessed. It works by abstracting physical storage into a unified, flexible system. But to understand storage virtualization, it is important to also appreciate server virtualization and why the idea of virtualization came into being.

In this guide, we explain that background and take an in-depth look at how virtual machines are created and managed, their operating principles, and the types of hypervisors and server virtualization.

Introduction to Server Virtualization

Before virtualization became commonplace, organizations had to deploy a dedicated physical server for each application, such as print servers, email servers, and database servers.

This approach forced businesses to underutilize their hardware, resulting in increased costs and overcrowding in data centers.

The idea of sharing one machine's resources among many workloads predates this problem. IBM invested in developing resource-sharing (virtualization) solutions for mainframes in the late 1960s and early 1970s. Then in 1999, VMware revolutionized the industry by bringing virtualization to the x86 architecture, which made it available on commodity servers.

The key technology behind this transformation is called a hypervisor. It is a software layer that sits between the hardware and the operating systems and manages resources.

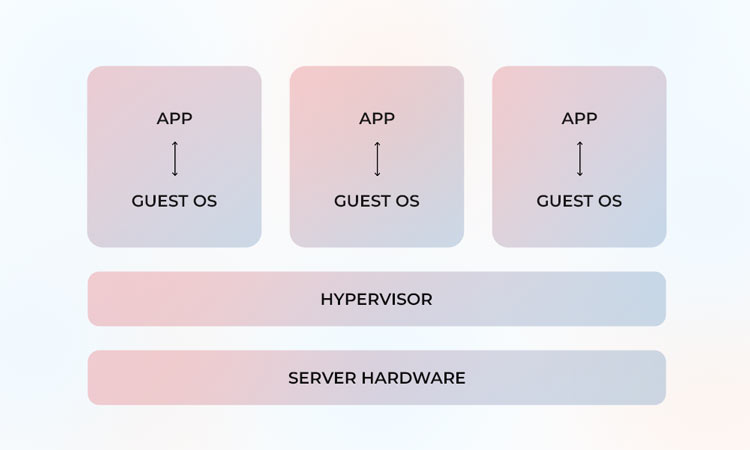

The fundamental architecture of a virtualized server is as follows.

- The server is the bottom layer, consisting of CPU, primary memory (RAM), and secondary memory (SSD or HDD).

- Then, instead of a single operating system running directly on the hardware, a hypervisor acts as the middle layer. It virtualizes the hardware resources and allocates them to multiple virtual machines (VMs), which can be imagined as the top layer.

So, each VM gets its own virtual CPU, virtual RAM, virtual storage, and virtual network.

Virtualization, as we understand it today, has come a long way since the 60s.

Initially, virtualization did not address storage challenges, and each virtual machine's data was still tied to a single physical machine. This meant that whenever the hardware failed, the applications running on it went down and their data could be lost.

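To overcome this, technologies like VMware's vMotion and Fault Tolerance were introduced. vMotion enables live migration of running VMs between servers, while Fault Tolerance keeps a live secondary copy of a VM on another host so that it can take over if the first host fails.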

For VM mobility and failover, the servers involved typically need access to shared storage, such as a SAN or NAS. This is where storage virtualization becomes important.
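The dependency on shared storage can be illustrated with a minimal sketch. This is a toy model, not any vendor's API: the `Host` and `VM` classes, the datastore names, and the `can_migrate_without_copy` helper are all hypothetical and exist only to show why a VM whose disk lives on a host-local datastore cannot simply be restarted or moved to another host.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """A physical server and the datastores it can reach (hypothetical model)."""
    name: str
    datastores: set = field(default_factory=set)


@dataclass
class VM:
    """A virtual machine whose virtual disk lives on exactly one datastore."""
    name: str
    datastore: str


def can_migrate_without_copy(vm: VM, destination: Host) -> bool:
    """True if the destination host already sees the VM's disk.

    With shared storage (SAN or NAS), only the running state has to move.
    With host-local storage, the disk itself would have to be copied first.
    """
    return vm.datastore in destination.datastores


# Illustrative names only: one shared LUN plus one local disk per host.
host_a = Host("host-a", {"san-lun-01", "local-a"})
host_b = Host("host-b", {"san-lun-01", "local-b"})

shared_vm = VM("web-01", "san-lun-01")
local_vm = VM("db-01", "local-a")

if __name__ == "__main__":
    print(can_migrate_without_copy(shared_vm, host_b))
    print(can_migrate_without_copy(local_vm, host_b))
```

In this model, the VM on the shared datastore can move to the second host, while the VM on local storage cannot do so without its disk being copied first.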

So, while server virtualization abstracts computing resources, storage virtualization abstracts data storage and allows IT teams to build scalable, flexible, and fault-tolerant environments.

In the next sections, we will look at how virtualization works, its different types, and how server and storage virtualization complement each other. We will also cover the benefits and challenges of these approaches.

Operating Principles of Virtualization

Virtualization is made possible by the hypervisor. It is a lightweight software layer installed on a physical server, which abstracts the underlying hardware. It also enables the creation of multiple virtual machines.

Each VM operates as an independent system with its own operating system, applications, and virtualized hardware.

There are two types of hypervisors:

- Type 1 Hypervisors, or Bare Metal: These are installed directly on the physical server and take the place of a general-purpose host OS. Type 1 hypervisors are used in enterprise environments and data centers as they provide better performance, security, and resource efficiency. Examples include VMware ESXi, Microsoft Hyper-V, KVM, Oracle VM Server, and Citrix XenServer.

- Type 2 Hypervisors, or Hosted: These run as an application on top of an existing operating system. They allow running multiple OSs on the same PC. Examples include Oracle VirtualBox, VMware Workstation, and VMware Fusion.

How Virtual Machines Are Created and Managed

Once the hypervisor is installed, an administrator can create virtual machines by allocating virtual resources from the physical host to them.

Each virtual machine is assigned the following virtual resources:

- vCPU (virtual CPU): a portion of the physical processor's computing power.

- vRAM (virtual RAM): a slice of the host's total memory.

- Virtual storage: a virtual hard disk file (for example, VMDK for VMware and VHD or VHDX for Hyper-V).

- vNIC (virtual network interface card): a software-defined network adapter.

Here is an example.

Let us assume we have a server with 24 CPU cores, 96 gigabytes of RAM, 1 terabyte of storage, and dual 10 GbE network adapters, and it has to host three virtual machines. This is how these virtual machines may be created:

| VM Type | vCPU | vRAM | vStorage |
| --- | --- | --- | --- |
| Web Server | 8 | 16 GB | 200 GB |
| Database Server | 8 | 32 GB | 400 GB |
| Application Server | 4 | 16 GB | 200 GB |

The hypervisor can dynamically schedule workloads across the physical hardware and ensure that resources are used efficiently while maintaining isolation between virtual machines.
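To make the arithmetic in the example concrete, here is a minimal Python sketch that adds up the allocations from the table and compares them with the host's capacity. It rests on simplifying assumptions that are not in the example itself: 1 TB is treated as 1000 GB, one vCPU maps to one physical core, and nothing is overcommitted. The `Resources` class and the helper functions are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    vcpus: int
    ram_gb: int
    storage_gb: int


# Host from the example: 24 cores, 96 GB RAM, 1 TB storage.
# Assumption: 1 TB is treated as 1000 GB and one vCPU maps to one core.
HOST = Resources(vcpus=24, ram_gb=96, storage_gb=1000)

# Allocations taken from the table above.
VMS = {
    "Web Server": Resources(8, 16, 200),
    "Database Server": Resources(8, 32, 400),
    "Application Server": Resources(4, 16, 200),
}


def total_allocated(vms: dict) -> Resources:
    """Sum the resources assigned to all virtual machines."""
    return Resources(
        vcpus=sum(vm.vcpus for vm in vms.values()),
        ram_gb=sum(vm.ram_gb for vm in vms.values()),
        storage_gb=sum(vm.storage_gb for vm in vms.values()),
    )


def headroom(host: Resources, vms: dict) -> Resources:
    """Return what is left on the host after all allocations."""
    used = total_allocated(vms)
    return Resources(
        vcpus=host.vcpus - used.vcpus,
        ram_gb=host.ram_gb - used.ram_gb,
        storage_gb=host.storage_gb - used.storage_gb,
    )


def fits(host: Resources, vms: dict) -> bool:
    """True if no resource is allocated beyond the host's capacity."""
    left = headroom(host, vms)
    return min(left.vcpus, left.ram_gb, left.storage_gb) >= 0


if __name__ == "__main__":
    print("allocated:", total_allocated(VMS))
    print("headroom: ", headroom(HOST, VMS))
    print("fits:     ", fits(HOST, VMS))
```

Under these assumptions, the three VMs use 20 vCPUs, 64 GB of RAM, and 800 GB of storage, which leaves 4 cores, 32 GB of RAM, and 200 GB of storage for the hypervisor itself or for future growth. Real hypervisors often allow vCPU overcommitment, so a strict check like this is a conservative starting point.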

Types of Server Virtualization

We have seen how server virtualization allows multiple workloads to run on a single physical machine by abstracting hardware resources.

However, different virtualization methods exist because every method is a trade-off between compatibility, performance, and scalability, and each one also provides a different degree of isolation between workloads.

Early virtualization efforts had to balance these three factors.

- Compatibility: Should any operating system be able to run without modification?

- Performance: Should we avoid virtualization overhead, i.e., the extra CPU and memory consumed by the virtualization layer itself?

- Scalability: Should virtualization be lightweight enough for cloud-native workloads?

Three major types of server virtualization evolved, each optimized for different scenarios. Let's look at each of them:

Full Virtualization

This approach provides complete hardware emulation and allows guest operating systems to run unmodified.

The hypervisor manages all the interactions between the guest operating system and the physical hardware. This ensures strong isolation.

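While this approach supports any operating system that runs on the hardware, it also introduces performance overhead because the hypervisor must intercept and emulate privileged instructions. Hardware assistance such as Intel VT-x and AMD-V reduces this cost on modern CPUs. Hypervisors that support this approach include VMware ESXi, Microsoft Hyper-V, and KVM.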

Para-Virtualization

This approach optimizes performance by making the guest operating system aware that it is running in a virtualized environment. So instead of relying on hardware emulation, the guest operating system communicates with the hypervisor directly through specialized calls known as hypercalls.

This reduces virtualization overhead but also requires operating system modifications or specialized drivers. Xen in para-virtualized mode and KVM with virtio drivers use this method.
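One way to see where the savings come from is a toy model that counts transitions from the guest into the hypervisor. This is a deliberately simplified sketch, not a description of how any particular hypervisor works: the function names, the operation list, and the batch size are all hypothetical. It assumes that an unmodified guest causes one trap per privileged operation, while an aware guest can queue several operations and hand them over in one call.

```python
def exits_with_trap_and_emulate(privileged_ops: list) -> int:
    """Unmodified guest: every privileged operation traps on its own."""
    return len(privileged_ops)


def exits_with_batched_hypercalls(privileged_ops: list, batch_size: int) -> int:
    """Aware guest: operations are queued and submitted one batch per call."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    # Ceiling division: a final, partially filled batch still costs one call.
    return -(-len(privileged_ops) // batch_size)


if __name__ == "__main__":
    # Hypothetical workload: ten page-table updates issued by the guest.
    ops = ["update_page_table"] * 10
    print("trap-and-emulate exits:", exits_with_trap_and_emulate(ops))
    print("batched hypercalls:    ", exits_with_batched_hypercalls(ops, batch_size=4))
```

In this model, ten operations cost ten transitions when each one traps, but only three when they are submitted in batches of four. Real overhead depends on the hardware, the hypervisor, and the workload, so the counts are illustrative only.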

OS-Level Virtualization

This is also called containerization, and it takes a different approach that eliminates the need for separate hypervisors. Instead, a single operating system kernel is used to run multiple isolated instances (each of which is known as a container).

Because of this approach, IT admins can achieve efficient resource utilization and rapid scalability. However, this approach requires that all containers use the same OS kernel as the host. Container technologies like Docker and LXC, together with orchestrators such as Kubernetes, make this method ideal for cloud-native applications and microservices.
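The shared-kernel property is easy to observe. The short script below is a minimal sketch that uses only Python's standard `platform` module to report the kernel it is running on; the `kernel_info` helper is a hypothetical name. Assuming a Linux host running Linux containers natively, the script should report the same kernel release on the host and inside any container on that host, whereas inside a full virtual machine it would report the guest's own kernel.

```python
import platform


def kernel_info() -> dict:
    """Report the operating system name and kernel release seen by this process."""
    return {
        "system": platform.system(),
        "kernel_release": platform.release(),
    }


if __name__ == "__main__":
    info = kernel_info()
    print(f"{info['system']} kernel {info['kernel_release']}")
```

Note that container tools on macOS or Windows desktops usually run containers inside a Linux virtual machine, so in that setup the script would report that VM's kernel and not the desktop's.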

Conclusion

Server virtualization has come a long way, from IBM mainframes in the 1960s to today’s multi-hypervisor, cloud-native data centers. By letting one box behave like many, it cuts hardware spend, reduces downtime, and makes scaling as easy as clicking “add VM.”
