Summary

- A SAN RAID failure is a critical enterprise event that can halt multiple applications and virtual environments that depend on its centralized block-level storage.

- Watch for SAN RAID failure symptoms across your entire infrastructure. These include signs like inaccessible LUNs on host servers, errors within the SAN fabric, and explicit "Degraded" or "Offline" statuses on the storage array.

- Your immediate response is the most critical factor: safely contain the problem by halting I/O, investigate the full data path (not just the array), and meticulously document the SAN RAID’s state before making any changes.

- Avoid DIY recovery. Enterprise SANs use proprietary RAID algorithms, so standard data recovery software can misread the on-disk layout, cause irreversible damage, and turn a recoverable situation into permanent SAN RAID data loss.

- When facing complex issues like controller or multiple drive failures, the only safe path is to engage professional SAN RAID data recovery services for a controlled, forensic recovery.

In a storage area network (SAN), RAID (Redundant Array of Independent Disks) is the safety net that keeps critical data online even when individual disks fail. But when that net tears, the impact is severe.

A SAN RAID failure doesn’t just take down a volume; it can stall dozens of servers, freeze VMs, and disrupt entire business applications.

This is why IT teams need a clear view of:

- how SAN-based RAID issues show up,

- why they happen, and

- what immediate steps can protect data before recovery begins.

In this guide, we’ll focus on RAID failures inside SAN environments and connect the dots from early warning signals to secure SAN data recovery.

Symptoms of SAN RAID Failure

SANs are built for resilience, but SAN RAID failures are not unheard of. Common symptoms of a failing SAN RAID setup fall into three categories.

1. At the Host and Virtualization Layer

This is where your end-users and applications will first feel the pain. The signs are commonly misinterpreted as server or software problems.

a) Inaccessible Storage

This is the most obvious symptom. Virtual machines may crash or freeze, databases become unresponsive, and entire datastores can become inaccessible or disappear from your hypervisor management console. In VMware environments, this is often reported as an “All Paths Down” (APD) condition.

b) Massive I/O Errors and Latency

Servers connected to the SAN will start logging a storm of I/O errors and timeouts. Applications become extremely slow as they wait for storage requests that never complete. This pattern usually points to a struggling back-end array, although a fabric or path fault can look the same.

c) Flapping Paths

Your multipathing software, which manages the redundant connections to the SAN, may begin to report paths constantly failing and being restored. This indicates instability in the connection, which often originates from a struggling storage controller.
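One simple way to make "flapping" measurable is to count how often each path changes state within a time window. This sketch makes several assumptions. The event format, (timestamp in seconds, path name, state) tuples, is hypothetical. So are the 300-second window and the four-transition threshold. Real multipathing software reports path events in its own formats, so treat this as an illustration of the idea rather than a ready-made monitor.

```python
from collections import defaultdict, deque

def find_flapping_paths(events, window_s=300.0, max_transitions=4):
    """Return the set of paths that changed state more than max_transitions
    times within any window_s-second span.

    events: iterable of (timestamp_seconds, path_name, state) tuples, a
    hypothetical format used only for this sketch.
    """
    last_state = {}
    recent = defaultdict(deque)  # path -> timestamps of recent transitions
    flapping = set()
    for ts, path, state in sorted(events):
        previous = last_state.get(path)
        last_state[path] = state
        if previous is None or previous == state:
            continue  # first sighting or no change, so not a transition
        window = recent[path]
        window.append(ts)
        while ts - window[0] > window_s:  # forget transitions outside the window
            window.popleft()
        if len(window) > max_transitions:
            flapping.add(path)
    return flapping

# path-a alternates active/failed every 10 s; path-b reports once and stays up.
events = [(t, "path-a", "failed" if (t // 10) % 2 else "active") for t in range(0, 60, 10)]
events.append((0, "path-b", "active"))
print(find_flapping_paths(events))  # {'path-a'}
```

A path that fails once and stays down is not flagged, because it produces only one transition. That matches the distinction between a clean failover and an unstable connection.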

2. Within the SAN Fabric

If your SAN uses Fibre Channel, the switches themselves can provide vital clues.

a) Port Errors and No-Light Conditions

Switch management interfaces may show a high number of error frames, or ports connecting to the storage array may simply go dark.

b) Zoning and Fabric Service Issues

In rare cases, a severely malfunctioning RAID array controller can disrupt the fabric and cause other devices to have intermittent connectivity issues.

3. On the Storage Array Itself

The array’s own management interface is your most direct source for finding the cause of SAN RAID failure.

a) Degraded or Offline Status

The array’s UI will explicitly report a RAID group or storage pool as "Degraded," "Rebuilding," or "Offline." This is direct confirmation of a drive or controller problem.

b) Controller Failover Events

In a dual-controller setup, you might see logs indicating that one controller has failed and the other has taken over the workload.

c) Physical LED Indicators

Never ignore the lights. A solid or flashing amber/red light on one or more drive caddies is the array’s physical way of telling you exactly where the hardware problem lies.

Common Causes of SAN RAID Failure

Typical root causes include:

1. Multiple Disk Failures

Even enterprise-grade disks have finite lifespans. When one disk fails, the rebuild that follows puts sustained stress on the surviving drives. The RAID group collapses if another disk fails mid-rebuild in a single-parity group such as RAID 5, especially a disk from the same manufacturing batch. This is one of the most common triggers of SAN RAID data loss.
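To see why rebuild time and drive count matter, here is a rough estimate of the chance that a surviving disk fails while a rebuild is running. It is a simplified sketch built on two assumptions: a constant failure rate derived from an annualized failure rate (AFR), and failures that are independent between drives. The 7 survivors, 2% AFR and rebuild durations are hypothetical inputs. Drives from the same batch tend to fail together, so the independence assumption likely understates the real risk.

```python
import math

HOURS_PER_YEAR = 8760

def p_failure_during_rebuild(surviving_drives, afr, rebuild_hours):
    """Estimate P(at least one surviving drive fails before the rebuild ends).

    Assumes a constant (exponential) failure rate derived from the AFR and
    independent failures across drives, which ignores same-batch correlation.
    """
    if not 0.0 <= afr < 1.0:
        raise ValueError("afr must be in [0, 1)")
    rate_per_hour = -math.log1p(-afr) / HOURS_PER_YEAR
    p_one_drive = -math.expm1(-rate_per_hour * rebuild_hours)
    return 1.0 - (1.0 - p_one_drive) ** surviving_drives

# Hypothetical 8-drive RAID 5 group with one failed member: 7 survivors.
for hours in (12, 24, 72):
    print(f"{hours:>3} h rebuild: {p_failure_during_rebuild(7, 0.02, hours):.4%}")
```

Longer rebuilds and larger groups both push the estimate up. Correlated failures push it up further, and this model deliberately leaves them out.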

2. Controller or Firmware Problems

A RAID controller failure can occur when the controller crashes, loses cache protection (for example, through a failed cache battery or supercapacitor), or runs buggy firmware that mishandles parity and corrupts metadata. In practice, the drives themselves are often fine, but the system no longer knows how to assemble them into a consistent RAID set.
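To read a RAID 5 volume, a controller must know three things: the member order, the chunk size, and how parity rotates across members. That geometry normally lives in the controller’s metadata. The sketch below assumes the left-symmetric parity rotation that Linux md uses by default; proprietary SAN arrays may use different, undocumented layouts. It maps a logical chunk number (a byte offset divided by an assumed chunk size) to a member and stripe. It also shows the XOR arithmetic that lets parity stand in for one missing member. Get the order or rotation wrong and chunks are read from the wrong disk, which is why losing the metadata leaves intact drives unreadable.

```python
from functools import reduce

def locate_chunk(logical_chunk, n_members):
    """Map a logical data chunk to (member, stripe) in RAID 5.

    Assumes left-symmetric parity rotation (the Linux md default); real SAN
    arrays may use a different, proprietary layout.
    """
    data_per_stripe = n_members - 1
    stripe, index = divmod(logical_chunk, data_per_stripe)
    parity_member = (n_members - 1) - (stripe % n_members)
    member = (parity_member + 1 + index) % n_members
    return member, stripe

def xor_chunks(chunks):
    """XOR equal-length byte strings: RAID 5 parity and single-member rebuild."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*chunks))

# Parity for one stripe, then recovery of a "lost" data chunk from the rest.
d0, d1 = b"\x0f\xf0", b"\x33\x33"
parity = xor_chunks([d0, d1])
assert xor_chunks([d0, parity]) == d1

# Where the first six data chunks live on a 3-member set: (member, stripe).
print([locate_chunk(c, 3) for c in range(6)])
# [(0, 0), (1, 0), (2, 1), (0, 1), (1, 2), (2, 2)]
```
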

3. Pathing and Fabric Issues

SANs rely on redundant fabrics and Host Bus Adapters (HBAs) for fault tolerance. But if zoning, cables, or switch ports fail in the wrong pattern, entire RAID members can disappear from the array. When enough members go “dark,” the RAID group fails even though the disks themselves are intact.
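The point at which missing members turn a degraded group into a failed one depends on the RAID level. This minimal sketch encodes the guaranteed tolerance for a few common levels. It treats members that have gone dark on the path exactly like failed disks, since the array cannot read from either. RAID 10 is left out because its tolerance depends on which mirror pairs lose members. The level names and status strings are illustrative, not any vendor’s API.

```python
# Member losses each level is guaranteed to survive (illustrative subset).
# RAID1 assumes a two-way mirror.
GUARANTEED_TOLERANCE = {"RAID0": 0, "RAID1": 1, "RAID5": 1, "RAID6": 2}

def raid_group_state(level, missing_members):
    """Classify a RAID group by how many members are unavailable.

    A member counts as missing whether the disk died or the path to it
    went dark: either way the array cannot read from it.
    """
    if missing_members < 0:
        raise ValueError("missing_members cannot be negative")
    tolerance = GUARANTEED_TOLERANCE[level]
    if missing_members == 0:
        return "Optimal"
    if missing_members <= tolerance:
        return "Degraded"
    return "Offline"

print(raid_group_state("RAID5", 1))  # Degraded
print(raid_group_state("RAID5", 2))  # Offline
print(raid_group_state("RAID6", 2))  # Degraded
```
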

4. Human Error and Design Gaps

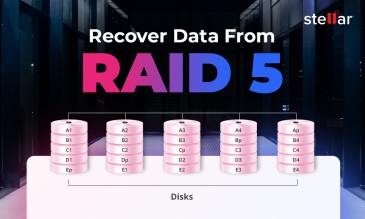

Untrained personnel pulling the wrong drive, rolling out untested firmware updates, or building arrays with single points of failure can all lead to sudden SAN failure. Poor planning, such as using RAID 5 with very large-capacity drives, further increases rebuild risk and exposure to unrecoverable read errors.
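The large-drive risk can be estimated with a common back-of-the-envelope model. A RAID 5 rebuild must read every surviving drive in full, and each bit read carries a small chance of an unrecoverable read error (URE). That chance comes from the drive’s datasheet. Rates such as one error per 10^14 bits for desktop-class drives and per 10^15 for many enterprise drives are typical, but check your own drives’ specifications. The sketch assumes independent errors at exactly the quoted rate, so it is pessimistic for drives that beat their spec. The four-drive, 12 TB group is hypothetical.

```python
import math

def p_ure_during_rebuild(surviving_drives, capacity_tb, ure_per_bit):
    """Probability of hitting at least one URE while reading all survivors.

    capacity_tb uses decimal terabytes (10**12 bytes), as drive vendors do.
    Assumes independent errors at exactly the datasheet rate.
    """
    bits_read = surviving_drives * capacity_tb * 10**12 * 8
    # 1 - (1 - p)**n, computed with log1p/expm1 to stay accurate for tiny p.
    return -math.expm1(bits_read * math.log1p(-ure_per_bit))

# Hypothetical RAID 5 of four 12 TB drives: three survivors to read.
for rate in (1e-14, 1e-15):
    print(f"URE rate {rate:g}/bit: {p_ure_during_rebuild(3, 12, rate):.1%}")
```

Under these assumptions, the higher error rate makes a URE during the rebuild more likely than not. This is the arithmetic behind the warning about RAID 5 on very large drives.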

Immediate Safe Responses After SAN RAID Failure

In the face of a potential SAN failure, your primary aim is to prevent further harm. The actions you take immediately after the failure can determine whether your data is recoverable.

Step 1: Contain the Problem and Halt I/O

Before you try to fix anything, stop the bleeding. Your goal is to prevent any new write operations that could overwrite recoverable data or cause further corruption.

- If possible, gracefully shut down the servers and applications connected to the affected LUNs.

- If you cannot shut down, use your hypervisor or operating system tools to migrate critical services to a different, healthy datastore.

- At the very least, disconnect the affected hosts to prevent a constant stream of failed I/O requests from compounding the problem.

Step 2: Investigate the Entire Data Path

Don't immediately assume the array is the only problem. A SAN is a chain of components, and the issue could be elsewhere.

- Check the Fabric: Log in to your Fibre Channel or iSCSI switches. Are the ports connected to the array and hosts online? Are you seeing excessive errors?

- Check Host Connectivity: Look at the HBAs in your servers. Are they logged into the fabric? Is the multipathing software configured correctly and showing active paths? A simple faulty SFP transceiver or a bad cable can mimic a total storage failure.
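On Linux hosts, the Fibre Channel transport class exposes each HBA port’s link state under /sys/class/fc_host. That makes a quick first pass at the "are the HBAs logged in?" question easy to script. This sketch only reads those files and assumes a Linux host with FC HBAs. It does not cover iSCSI, and it does not replace your multipathing tool’s own path report (on Linux device-mapper multipath, for example, multipath -ll).

```python
from pathlib import Path

FC_HOST_ROOT = "/sys/class/fc_host"  # Linux FC transport class location

def fc_port_states(root=FC_HOST_ROOT):
    """Return {host: port_state} for each FC HBA port; {} if none are present."""
    base = Path(root)
    if not base.is_dir():
        return {}
    states = {}
    for host in sorted(base.iterdir()):
        state_file = host / "port_state"
        if state_file.is_file():
            states[host.name] = state_file.read_text().strip()
    return states

def ports_not_online(states):
    """Names of HBA ports whose link is anything other than Online."""
    return sorted(host for host, state in states.items() if state != "Online")

if __name__ == "__main__":
    states = fc_port_states()
    for host, state in states.items():
        print(f"{host}: {state}")
    print("Ports needing attention:", ports_not_online(states) or "none")
```

A port reporting something like Linkdown points you toward cabling, SFPs, or the switch port before you suspect the array.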

Step 3: Document and Preserve the State

Before you change anything, document everything.

- Pull Support Logs: Log in to your storage array's management interface and pull a complete diagnostic or support bundle. Do the same for your SAN switches. These logs are invaluable for diagnostics.

- Take Photos: Take clear pictures of the front and back of the array; pay close attention to which drive bay has an error light and how everything is cabled.
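You can make the collected logs and photos tamper-evident by recording a SHA-256 hash of every file at collection time. Anyone working on the case later can then confirm that nothing has changed. This step is an addition to the checklist above rather than something it prescribes. The sketch assumes all material has been copied into one local folder, and the folder and manifest names are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path

def sha256_file(path, chunk_size=1 << 20):
    """Hash a file in chunks so large support bundles need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def build_manifest(evidence_dir):
    """List every file under evidence_dir with its size and SHA-256 hash."""
    root = Path(evidence_dir)
    files = []
    for path in sorted(p for p in root.rglob("*") if p.is_file()):
        files.append({
            "file": path.relative_to(root).as_posix(),
            "bytes": path.stat().st_size,
            "sha256": sha256_file(path),
        })
    return {"created_utc": datetime.now(timezone.utc).isoformat(), "files": files}

if __name__ == "__main__":
    evidence = Path("evidence/san-incident")  # hypothetical folder of bundles and photos
    if evidence.is_dir():
        # Keep the manifest outside the evidence folder so it never hashes itself.
        out = Path("san-incident-manifest.json")
        out.write_text(json.dumps(build_manifest(evidence), indent=2))
```
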

Step 4: The Most Critical "Don'ts"

Avoiding the wrong move matters as much as making the right one. Following generic troubleshooting scripts from a manufacturer’s helpdesk or attempting DIY repairs can seriously limit the chances of a successful recovery.

- DO NOT start randomly rebooting storage controllers.

- DO NOT pull and re-seat multiple drives hoping it will fix the issue. This can prevent the array from ever recognizing its original configuration.

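- DO NOT attempt to force a failed drive back online or initialize a new RAID group using the old drives. Both actions are destructive. Forcing a stale drive online mixes outdated data into the array. Initializing a new group overwrites the RAID metadata and can erase the data outright.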

- DO NOT run any file system repair utilities (CHKDSK, fsck) on a LUN that has become corrupted. These tools assume the underlying RAID volume is consistent and know nothing about its structure. Their “repairs” write changes that can cause irreparable damage.

Know When to Engage Professional SAN RAID Data Recovery Services

When SAN RAID failures escalate beyond degraded states, the safest option is not trial-and-error but professional help. Attempting fixes in-house may seem faster, but once parity is overwritten or metadata is corrupted, even experts may not recover the original data.

This is why enterprises and SMBs alike rely on proven SAN RAID data recovery services.

At Stellar Data Recovery, our process is designed to protect every bit from the moment we receive the case.

- Direct consultation with an engineer to understand the SAN architecture, RAID level, and failure symptoms.

- Imaging of all physically damaged drives in Class 100 Cleanrooms to create bit-level clones before further analysis. This preserves the originals.

- Donor controller library to replace faulty RAID controllers or PCBs and regain access to RAID metadata.

- Virtual RAID reconstruction, where our tools rebuild striping, parity, and disk order without risking writes on originals.

- File system repair and data extraction from VMware datastores, Windows LUNs, Linux volumes, or database servers mapped to the SAN.

- Data integrity verification to ensure recovered data is consistent, complete, and usable.
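The imaging step above rests on a simple rule: read each source without ever writing to it, and do all further work on the copy. The sketch below illustrates that rule in software for a Unix-like system. It zero-fills blocks that cannot be read and records their byte ranges, loosely in the spirit of GNU ddrescue but far simpler: no retries, no splitting of failed blocks, no resumable map file. It is an illustration, not a recommendation to image failing SAN drives yourself. Reading a physically damaged drive can cause further damage, which is why the process above images such drives under cleanroom conditions.

```python
import os

def image_device(source, dest, block_size=1 << 20):
    """Copy source to dest block by block without ever writing to source.

    Unreadable blocks are zero-filled in the image; returns the list of
    (offset, length) byte ranges that could not be read. Unix-only (os.pread).
    """
    bad_ranges = []
    src_fd = os.open(source, os.O_RDONLY)  # read-only: the original is never modified
    try:
        size = os.lseek(src_fd, 0, os.SEEK_END)
        with open(dest, "wb") as out:
            offset = 0
            while offset < size:
                length = min(block_size, size - offset)
                try:
                    data = os.pread(src_fd, length, offset)
                except OSError:
                    data = b""  # treat the whole block as unreadable
                if len(data) < length:
                    bad_ranges.append((offset + len(data), length - len(data)))
                    data += b"\x00" * (length - len(data))
                out.write(data)
                offset += length
    finally:
        os.close(src_fd)
    return bad_ranges
```

All later analysis, such as virtual RAID reconstruction, then reads from the image files, so a mistake costs a re-copy rather than the original data.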

Whether it’s data loss from a two-disk failure in a RAID 5 setup at an SME or an enterprise-wide SAN failure across multiple controllers, Stellar has the infrastructure and experience to deliver results. With expertise across Dell EMC, NetApp, HPE, IBM, and others, we are the trusted partner you need when your SANs fail.

Frequently Asked Questions

Why does my RAID server keep crashing?

Repeated RAID server crashes often happen due to failing disks, firmware bugs, or controller faults. Without fixing the root cause, even rebuilt arrays can fail again.

Does a RAID failure always mean total data loss?

No. A RAID failure doesn't have to cause total data loss, but risky actions can make the loss permanent. These include rebuilding the array incorrectly (for example, rebuilding a degraded array with the wrong drive order or configuration), reconfiguring the RAID, and mishandling HDD replacements.

Is rebuilding a failed RAID array always safe?

Not always. A rebuild stresses the remaining disks. If another drive is weak, the rebuild may fail and cause complete RAID failure instead of recovery.

About The Author

Data Recovery Expert & Content Strategist